38 NLNL: Negative Learning for Noisy Labels

SIIT Lab - sites.google.com Youngdong Kim, Junho Yim, Juseung Yun, and Junmo Kim, "NLNL: Negative Learning for Noisy Labels," IEEE International Conference on Computer Vision (ICCV), 2019. Posted Aug 15, 2019, 10:47 PM by Chanho Lee. We have a publication accepted for IET Journal. Ji-Hoon Bae, Junho Yim and Junmo Kim, "Teacher-Student framework-based knowledge ...

NLNL: Negative Learning for Noisy Labels | Papers With Code Because the chances of selecting a true label as a complementary label are low, NL decreases the risk of providing incorrect information. Furthermore, to improve convergence, we extend our method by adopting PL selectively, termed as Selective Negative Learning and Positive Learning (SelNLPL).

Joint Negative and Positive Learning for Noisy Labels NLNL further employs a three-stage pipeline to improve convergence. As a result, filtering noisy data through the NLNL pipeline is cumbersome, increasing the training cost. In this study, we...


[Today's Abstract] NLNL: Negative Learning for Noisy Labels [Paper, DeepL Translation] NLNL: Negative Learning for Noisy Labels Abstract (translation) Convolutional Neural Networks (CNNs) provide excellent performance when used for image classification. The conventional way of training CNNs is to label images in a supervised manner, as in "input image belongs to this label" (Positive Learning; PL), which allows fast and accurate training if the labels are assigned correctly to all images. However, if inaccurate or noisy labels exist, training with PL provides wrong information and severely degrades performance.

Joint Negative and Positive Learning for Noisy Labels - SlideShare Prior work: proposes negative learning, which supplies a label other than the correct one. About Negative Learning for Noisy Labels (NLNL): an indirect learning method called Negative Learning (NL). When it is difficult to select the true label, training with a label other than the true one lets the noisy-label data ...

[1908.07387] NLNL: Negative Learning for Noisy Labels - arXiv.org NLNL: Negative Learning for Noisy Labels Youngdong Kim, Junho Yim, Juseung Yun, Junmo Kim Convolutional Neural Networks (CNNs) provide excellent performance when used for image classification.

NLNL: Negative Learning for Noisy Labels - arXiv Vanity Finally, semi-supervised learning is performed for noisy data classification, utilizing the filtering ability of SelNLPL (Section 3.5). 3.1 Negative Learning As mentioned in Section 1, typical method of training CNNs for image classification with given image data and the corresponding labels is PL.

NLNL-Negative-Learning-for-Noisy-Labels/main_NL.py at master ... - GitHub NLNL: Negative Learning for Noisy Labels. Contribute to ydkim1293/NLNL-Negative-Learning-for-Noisy-Labels development by creating an account on GitHub.

PDF NLNL: Negative Learning for Noisy Labels - arXiv The combination of all these methods is called Selective Negative Learning and Positive Learning (SelNLPL), which demonstrates excellent performance for filtering noisy data from training data (Section 3.4). Finally, semi-supervised learning is performed for noisy data classification, utilizing the filtering ability of SelNLPL (Section 3.5).

Joint Negative and Positive Learning for Noisy Labels | AITopics Training of Convolutional Neural Networks (CNNs) with data with noisy labels is known to be a challenge. Based on the fact that directly providing the label to the data (Positive Learning; PL) has a risk of allowing CNNs to memorize the contaminated labels for the case of noisy data, the indirect learning approach that uses complementary labels (Negative Learning for Noisy Labels; NLNL) has ...

loss function - Negative learning implementation in pytorch - Data ... Let's call the latter a "negative" label. An excerpt from the paper says (top formula is for the usual "positive" label loss (PL), bottom for the "negative" label loss (NL)): ... from NLNL-Negative-Learning-for-Noisy-Labels GitHub repo.

Deep Learning Classification With Noisy Labels | DeepAI It is widely accepted that label noise has a negative impact on the accuracy of a trained classifier. Several works have started to pave the way towards noise-robust training. ... [11] Y. Kim, J. Yim, J. Yun, and J. Kim (2019) NLNL: negative learning for noisy labels. ArXiv abs/1908.07387.

Joint Negative and Positive Learning for Noisy Labels NLNL further employs a three-stage pipeline to improve convergence. As a result, filtering noisy data through the NLNL pipeline is cumbersome, increasing the training cost. In this study, we propose a novel improvement of NLNL, named Joint Negative and Positive Learning (JNPL), that unifies the filtering pipeline into a single stage.

NLNL: Negative Learning for Noisy Labels | IEEE Conference Publication ... Because the chances of selecting a true label as a complementary label are low, NL decreases the risk of providing incorrect information. Furthermore, to improve convergence, we extend our method by adopting PL selectively, termed as Selective Negative Learning and Positive Learning (SelNLPL).

NLNL: Negative Learning for Noisy Labels | Request PDF Because the chances of selecting a true label as a complementary label are low, NL decreases the risk of providing incorrect information. Furthermore, to improve convergence, we extend our method...

NLNL: Negative Learning for Noisy Labels - Semantic Scholar A novel improvement of NLNL is proposed, named Joint Negative and Positive Learning (JNPL), that unifies the filtering pipeline into a single stage, allowing greater ease of practical use compared to NLNL. Decoupling Representation and Classifier for Noisy Label Learning Hui Zhang, Quanming Yao

ydkim1293/NLNL-Negative-Learning-for-Noisy-Labels - GitHub NLNL: Negative Learning for Noisy Labels. Contribute to ydkim1293/NLNL-Negative-Learning-for-Noisy-Labels development by creating an account on GitHub.

NLNL: Negative Learning for Noisy Labels - CORE Reader

ICCV 2019 Open Access Repository Because the chances of selecting a true label as a complementary label are low, NL decreases the risk of providing incorrect information. Furthermore, to improve convergence, we extend our method by adopting PL selectively, termed as Selective Negative Learning and Positive Learning (SelNLPL).
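The claim that a true label is rarely picked as the complementary label can be checked with a little arithmetic. The sketch below is an illustration, not code from the paper or its repository: it assumes symmetric label noise and a complementary label drawn uniformly from every class except the given one, and the function names are hypothetical. Under those assumptions NL supplies wrong information only when the given label is noisy and the draw happens to hit the true class, which occurs with probability noise_rate / (num_classes - 1), compared with noise_rate for PL.

```python
import random


def sample_complementary_label(given_label, num_classes, rng):
    """Pick uniformly among all classes except the given (possibly noisy) label."""
    candidates = [k for k in range(num_classes) if k != given_label]
    return rng.choice(candidates)


def wrong_information_rate(num_classes, noise_rate, trials=100_000, seed=0):
    """Estimate how often the complementary label equals the true label.

    Assumption: symmetric noise, i.e. a noisy label is a uniformly chosen
    class different from the true one.
    """
    rng = random.Random(seed)
    wrong = 0
    for _ in range(trials):
        true_label = rng.randrange(num_classes)
        given_label = true_label
        if rng.random() < noise_rate:
            # Corrupt the label: any class other than the true one.
            given_label = sample_complementary_label(true_label, num_classes, rng)
        complementary = sample_complementary_label(given_label, num_classes, rng)
        if complementary == true_label:
            # "Image is NOT class k" is false only when k is the true class.
            wrong += 1
    return wrong / trials


if __name__ == "__main__":
    estimate = wrong_information_rate(num_classes=10, noise_rate=0.3)
    print("simulated NL wrong-information rate:", estimate)
    print("closed form noise_rate / (c - 1):   ", 0.3 / 9)
```

With 10 classes and 30% symmetric noise, the closed form gives 0.3 / 9, roughly 0.033, whereas PL would be fed a wrong label 30% of the time.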

P-DIFF+: Improving learning classifier with noisy labels by Noisy ... Learning deep neural network (DNN) classifier with noisy labels is a challenging task because the DNN can easily over-fit on these noisy labels due to its high capability. In this paper, we present a very simple but effective training paradigm called P-DIFF+, which can train DNN classifiers but obviously alleviate the adverse impact of noisy ...

PDF Negative Learning for Noisy Labels - UCF CRCV Label Correction; Correct Directly; Re-Weight; Backwards Loss Correction; Forward Loss Correction; Sample Pruning; Suggested Solution - Negative Learning. Proposed Solution: Utilizing the proposed NL; Selective Negative Learning and Positive Learning (SelNLPL) for filtering; Semi-supervised learning; Architecture
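One way to picture the filtering step named in the outline above is a confidence threshold on the trained network's output. This is a hedged sketch rather than the SelNLPL implementation: the threshold value of 0.5, the function name, and the input layout (one list of class probabilities per sample) are all assumptions made for illustration.

```python
def filter_by_confidence(probabilities, given_labels, threshold=0.5):
    """Split sample indices into presumed-clean and presumed-noisy sets.

    probabilities: per-sample lists of class probabilities (hypothetical layout).
    given_labels:  the dataset labels, which may be noisy.
    threshold:     assumed cutoff; a sample counts as clean when the network
                   assigns more than this probability to its given label.
    """
    clean, noisy = [], []
    for index, (probs, label) in enumerate(zip(probabilities, given_labels)):
        if probs[label] > threshold:
            clean.append(index)
        else:
            noisy.append(index)
    return clean, noisy


if __name__ == "__main__":
    probs = [[0.9, 0.1], [0.4, 0.6], [0.2, 0.8]]
    labels = [0, 0, 1]
    print(filter_by_confidence(probs, labels))
```

In a pipeline of this shape, the samples on the noisy side would have their labels discarded and be handed to the semi-supervised step as unlabeled data.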

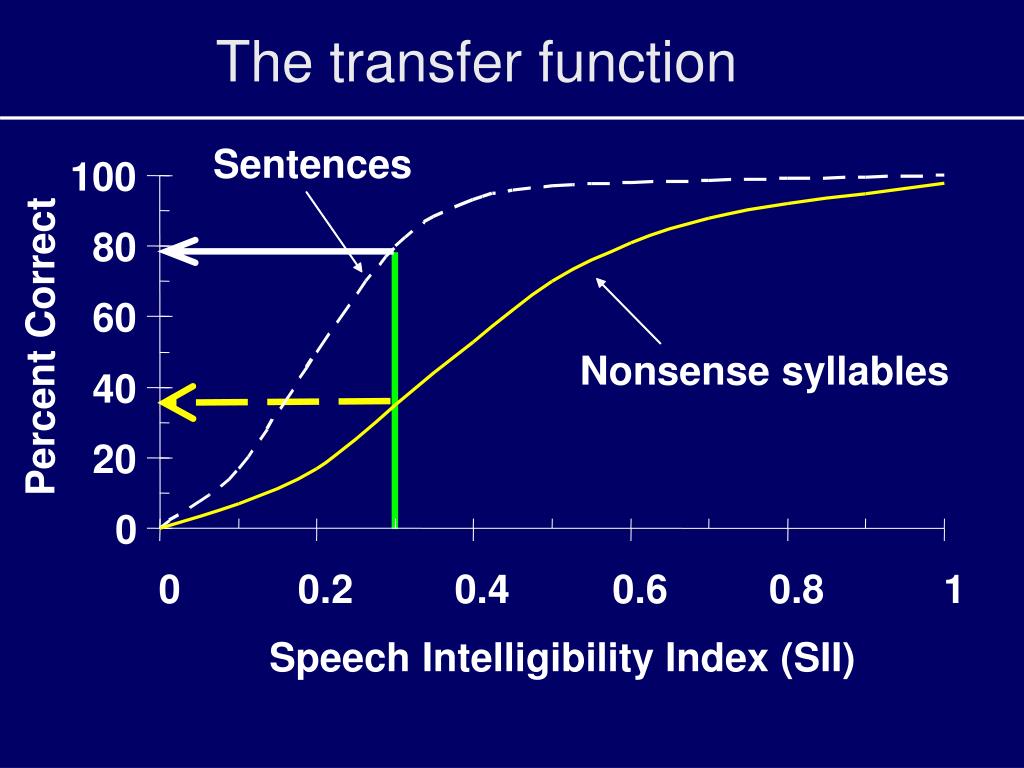

PDF Learning from Large-Scale Noisy Images - webpages.uncc.edu NLNL: Negative Learning for Noisy Labels, ICCV 2019. Conceptual comparison between Positive Learning (PL) and Negative Learning (NL). Regarding noisy data, while PL provides the CNN with wrong information (red balloon), NL has a higher chance of providing the CNN with correct information (blue balloon), because a dog is clearly not a bird.

Handling Noisy Labels for Robustly Learning from Self-Training Data for Low-Resource Sequence ...

Joint Negative and Positive Learning for Noisy Labels - Semantic Scholar This paper proposes a training strategy to identify and remove modality-specific noisy labels dynamically, which sorts the losses of all instances within a mini-batch individually in each modality, then selects noisy samples according to relationships between intra-modal and inter-modal losses.

Research Code for NLNL: Negative Learning for Noisy Labels However, if inaccurate labels, or noisy labels, exist, training with PL will provide wrong information, thus severely degrading performance. To address this issue, we start with an indirect learning method called Negative Learning (NL), in which the CNNs are trained using a complementary label as in "input image does not belong to this ...
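The complementary-label idea can be written down as a loss. The sketch below assumes the form usually given for NL, the negative log of (1 - p_k) where p_k is the softmax probability of the complementary label k, placed next to the ordinary cross-entropy used for PL. It is plain Python for illustration, not the code from the authors' repository, and the function names are hypothetical.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    peak = max(logits)
    exps = [math.exp(z - peak) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def pl_loss(logits, label):
    """Positive Learning: 'input image belongs to this label'.

    Cross-entropy, which pushes the probability of `label` towards 1.
    """
    return -math.log(softmax(logits)[label])


def nl_loss(logits, complementary_label, eps=1e-12):
    """Negative Learning: 'input image does not belong to this label'.

    Pushes the probability of `complementary_label` towards 0.
    `eps` guards against log(0) and is an implementation assumption.
    """
    p = softmax(logits)[complementary_label]
    return -math.log(max(1.0 - p, eps))


if __name__ == "__main__":
    logits = [2.0, 0.5, -1.0]
    # The NL loss is small when the network already rules the class out
    # and large when the network favours the class it is told to exclude.
    print("NL loss, unlikely class:", nl_loss(logits, 2))
    print("NL loss, favoured class:", nl_loss(logits, 0))
```

Because the NL term only penalizes probability mass on one excluded class, a single wrong complementary label constrains the network far less than a single wrong positive label does.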

NLNL-Negative-Learning-for-Noisy-Labels/main_NL.py at master · ydkim1293/NLNL-Negative-Learning ...

NLNL: Negative Learning for Noisy Labels - computer.org Convolutional Neural Networks (CNNs) provide excellent performance when used for image classification. The classical method of training CNNs is by labeling images in a supervised manner as in

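Paper walkthrough of "NLNL: Negative Learning for Noisy Labels" - Zhihu 0x01 Introduction I have recently been working on a data-filtering project and have read a number of papers on label noise. Today I will discuss one I came across earlier, the ICCV 2019 paper on noisy labels, "NLNL: Negative Learning for Noisy Labels". Paper link: …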

GitHub - ydkim1293/NLNL-Negative-Learning-for-Noisy-Labels: NLNL: Negative Learning for Noisy Labels

PDF NLNL: Negative Learning for Noisy Labels - CVF Open Access Meanwhile, we use NL method, which indirectly uses noisy labels, thereby avoiding the problem of memorizing the noisy label and exhibiting remarkable performance in filtering only noisy samples. Using complementary labels This is not the first time that complementary labels have been used.
